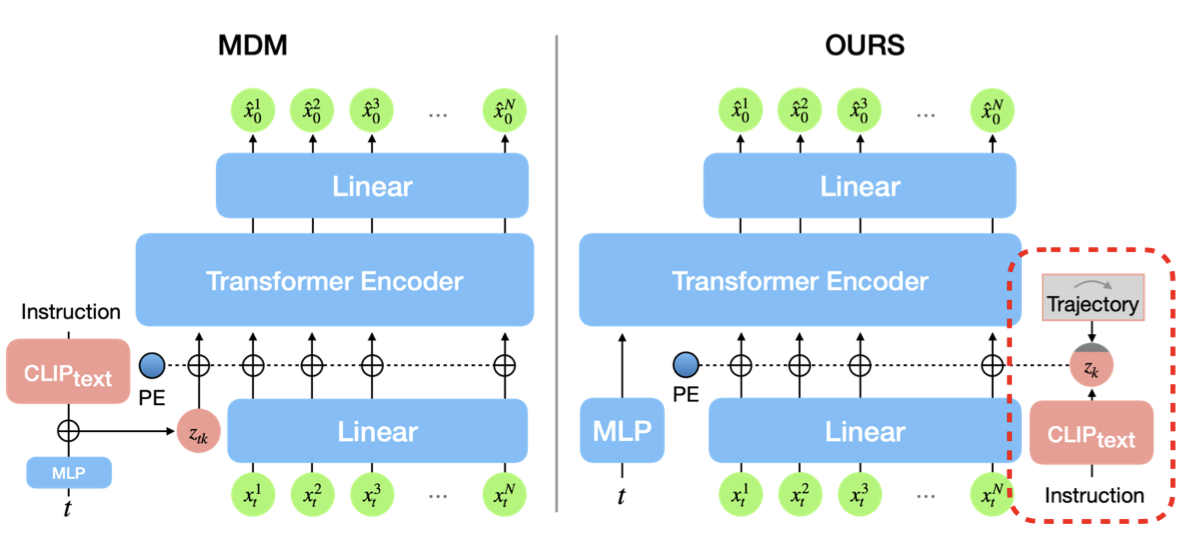

AdaptiveSliders: Semantic Editing

User-aligned Semantic Slider-based Editing of Text-to-Image Model Output. A framework for discovering interpretable directions in latent space, allowing users to fine-tune generated images with simple sliders.

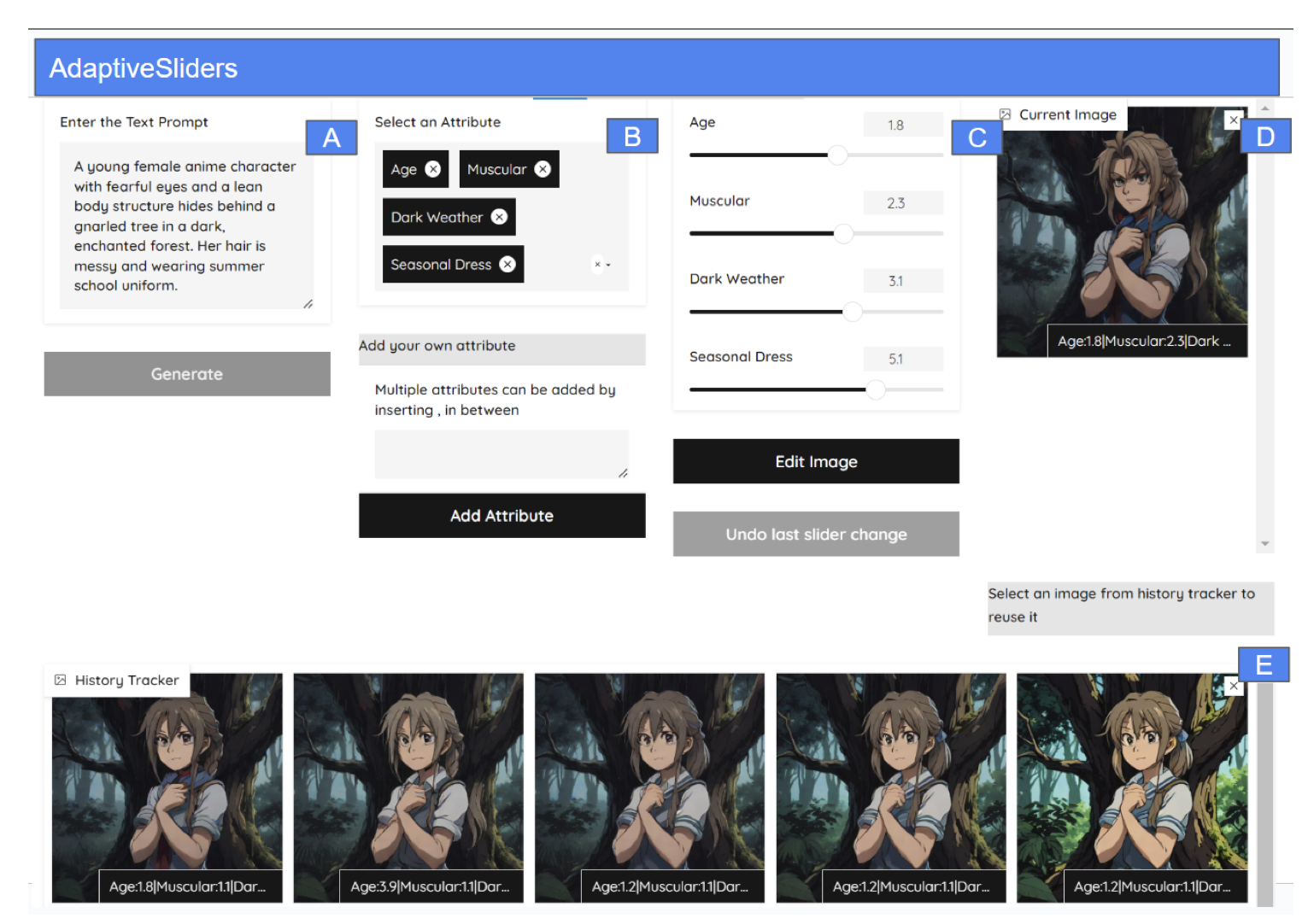

AvaTTAR: Table Tennis Training

Stroke training with embodied and detached visualization in Augmented Reality. We visualize stroke dynamics directly on the user's body to improve motor skill acquisition and spatial understanding.

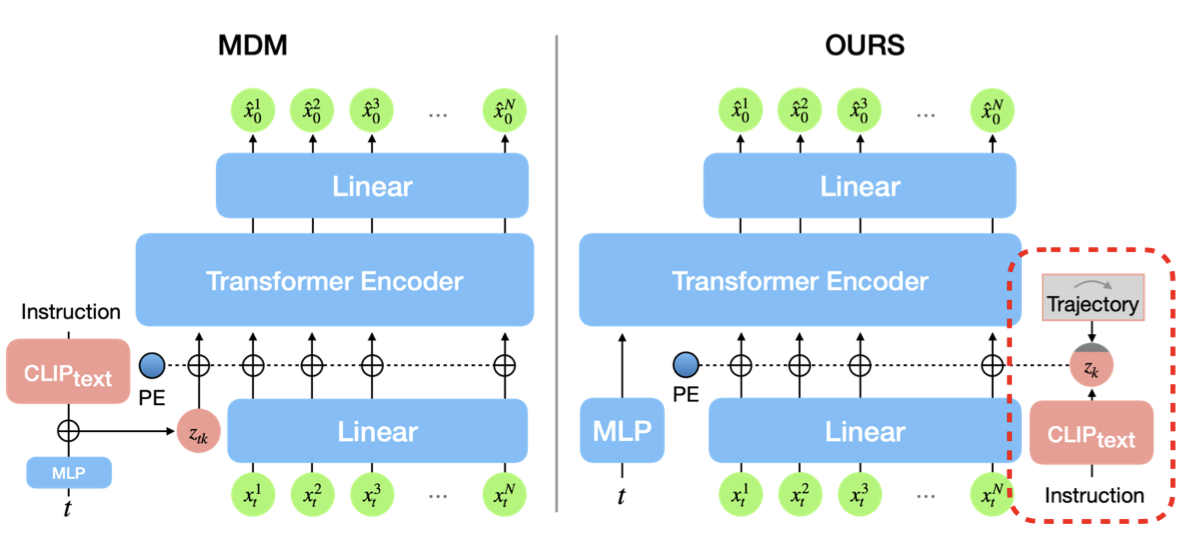

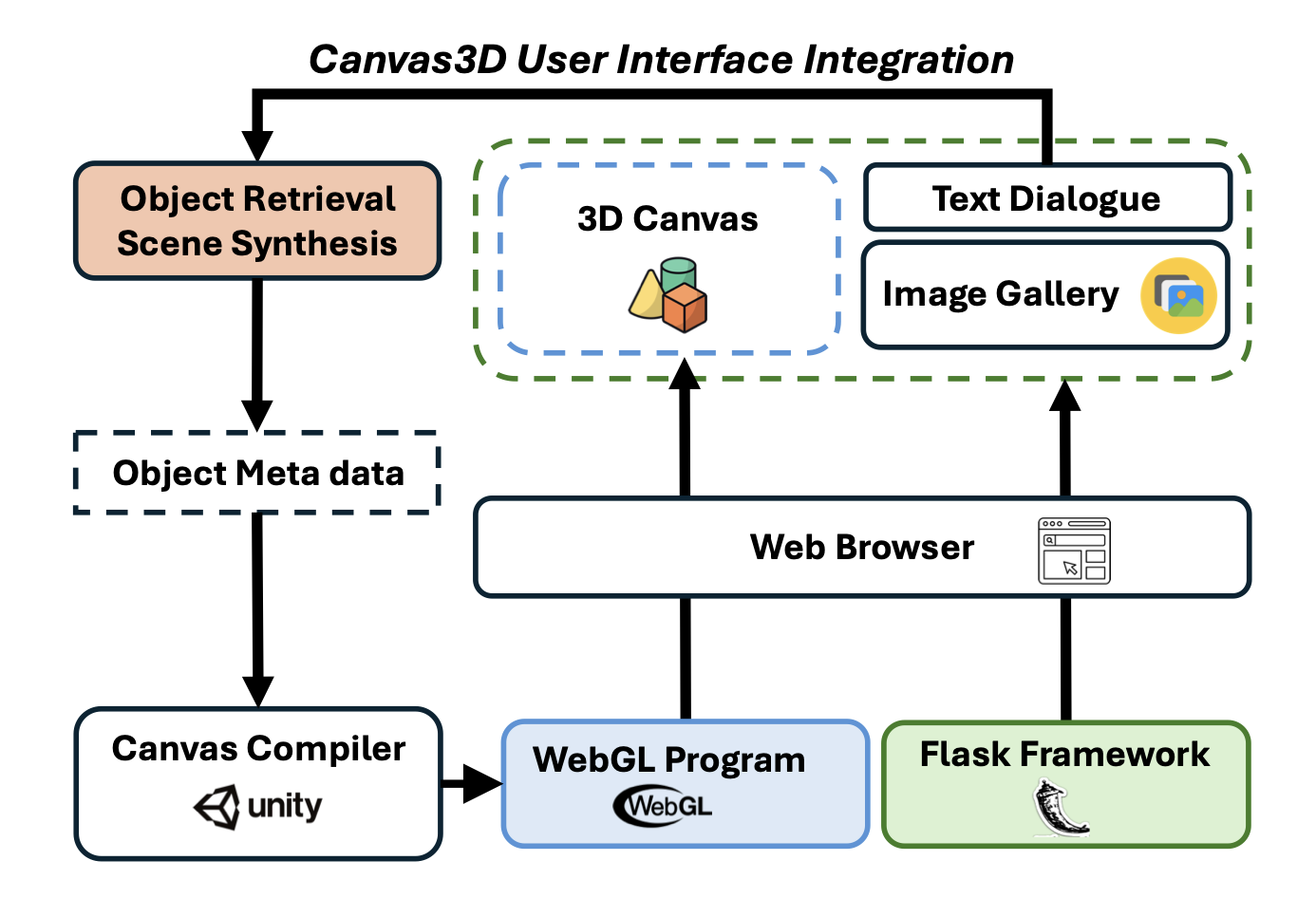

CANVAS-3D: Spatial Control

Empowering Precise Spatial Control for Image Generation with Constraints from a 3D Virtual Canvas. Users arrange coarse 3D proxies to guide the composition and layout of generated 2D images.

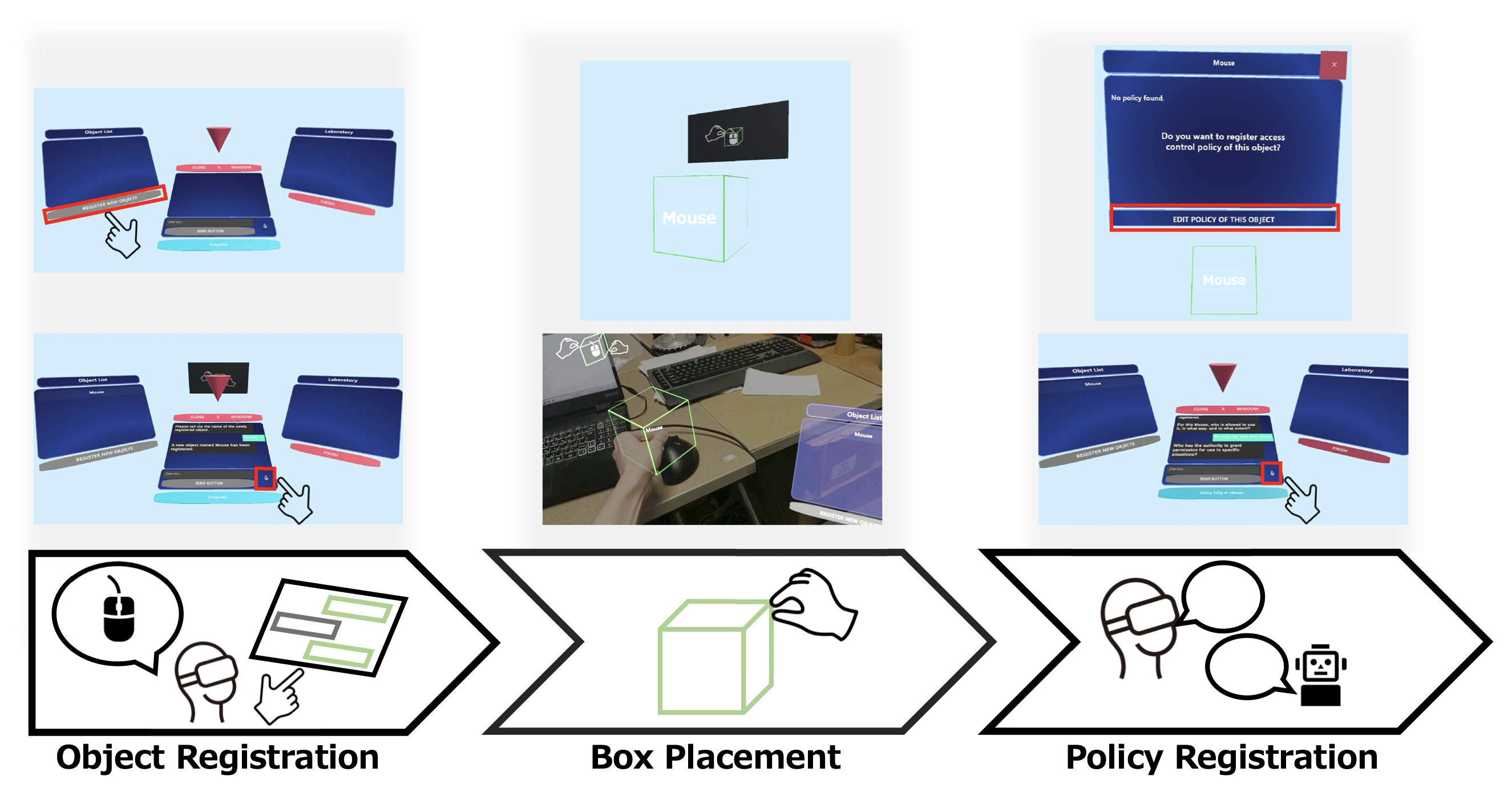

Transparent Barriers

Natural Language Access Control Policies for XR-Enhanced Everyday Objects. We use LLMs to interpret verbal commands and enforce spatial access policies in shared Mixed Reality environments.

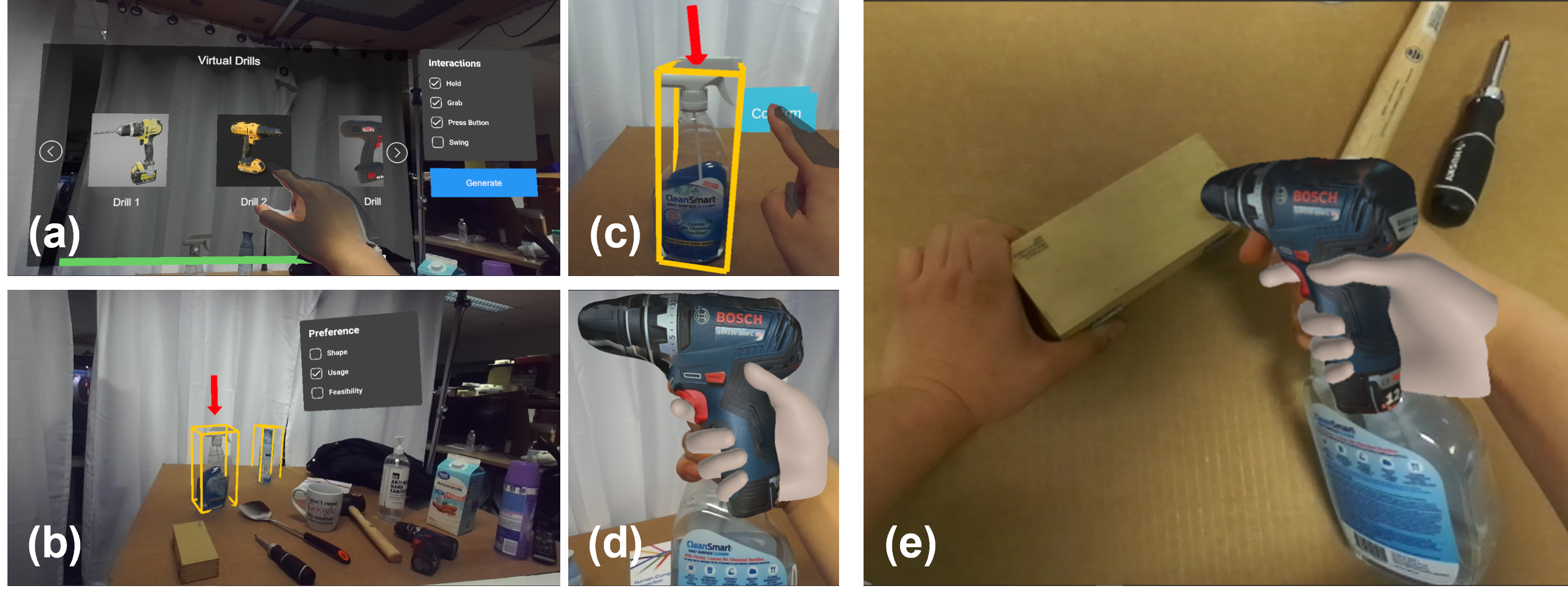

Ubi-TOUCH

Ubiquitous Tangible Object Utilization through Consistent Hand-object interaction in AR. The system opportunistically repurposes everyday physical objects as tangible proxies for virtual interactions.